The problem

Bring one of the world's largest image recognition ML models into the hands of as many people as possible. Enable them to identify plants in real time. Build a global map of every plant on the planet.

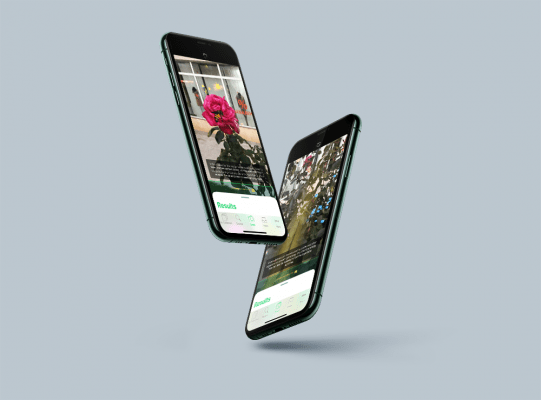

The solution

Design and build custom native applications: a native Swift app for iOS and a native Kotlin app for Android, both powered by a mobile app backend.

The result

PlantSnap has more than 40 million installs and an average rating of 4.4 globally. Users have created nearly 15 million plant entries and made more than 100 million plant identifications.

Tech stack

Swift

Kotlin

CoreML

MLKit

Figma

How to make high-tech AI usable by everyone?

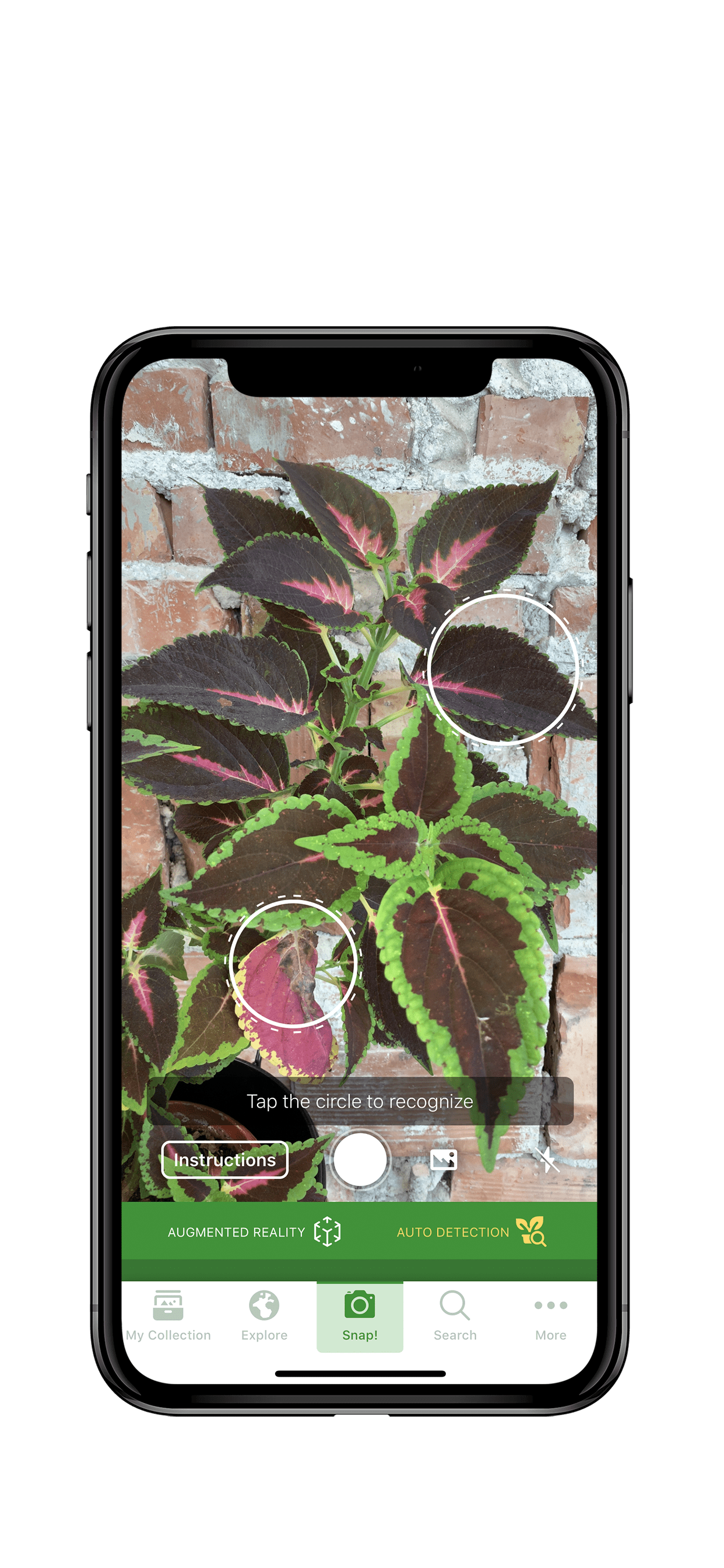

We started by building a custom camera solution for taking photos and preparing them for image recognition. We added guides for users, and this worked well at first. But as the image recognition model grew more complex, it became harder to match user photos to what the ML model expected.

It was incredibly challenging, and we tried several approaches. The one that made a real difference was integrating a custom plant detection model that runs on device.
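The case study does not say how the detector's output feeds into recognition. The following is only a minimal sketch, assuming a hypothetical on-device detector that returns bounding boxes in normalized coordinates (0 to 1) with confidence scores. Under that assumption, the app forwards a photo to the recognition model only when it finds a confident, large enough plant region, and otherwise prompts the user. `Detection`, `GateConfig`, `GateResult`, `gate` and the threshold values are illustrative names, not PlantSnap's code. The sketch is plain Swift with no Core ML or Vision calls, so it runs anywhere.

```swift
// Hypothetical detector output: a box in normalized image coordinates plus a confidence score.
struct Detection: Equatable {
    let x: Double
    let y: Double
    let width: Double
    let height: Double
    let confidence: Double

    var area: Double { width * height }
}

// Hypothetical thresholds; real values would be tuned against the recognition model.
struct GateConfig {
    var minConfidence = 0.6
    var minAreaFraction = 0.15
}

enum GateResult: Equatable {
    case noPlant               // prompt the user to point the camera at a plant
    case tooSmall              // prompt the user to move closer
    case accept(Detection)     // crop to this box and send it to the recognition model
}

func gate(_ detections: [Detection], config: GateConfig = GateConfig()) -> GateResult {
    // Ignore low-confidence detections.
    let confident = detections.filter { $0.confidence >= config.minConfidence }
    // Treat the largest confident box as the subject of the photo.
    guard let best = confident.max(by: { $0.area < $1.area }) else {
        return .noPlant
    }
    // A plant that fills too little of the frame is unlikely to be recognized well.
    if best.area < config.minAreaFraction {
        return .tooSmall
    }
    return .accept(best)
}

// Usage: two candidate boxes; the larger one is selected.
let frame = [
    Detection(x: 0.1, y: 0.1, width: 0.2, height: 0.2, confidence: 0.9),
    Detection(x: 0.3, y: 0.2, width: 0.5, height: 0.6, confidence: 0.8),
]
print(gate(frame))
```

Gating on the detector before recognition is one way to keep the recognition model's inputs close to what it was trained on: the user gets immediate feedback instead of an unreliable identification.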

Other features

USER PROFILE

USER COLLECTION

EXPLORE MAP

PLANT SEARCH

USER AUTHENTICATION

PUSH NOTIFICATIONS